A conversational design system

Natural language processing is a powerful tool, but on its own a voice user interface (VUI) isn’t enough to help customers navigate their use cases effectively. Great communication requires more than words: it combines data, visuals, and actions. This is a material design system case study.

AI conversational platform

Trying to get customer service via a chat window is often a terrible experience because the paradigm itself is broken.

Chat windows are rife with UX failures. A major one is that conversations aren’t linear: the chat timeline doesn’t reflect the randomness of how people talk, and topics can quickly diverge. You end up with a mess of content that goes all over the place. How do we improve it?

How do we teach a machine to have thousands of natural conversations that make customer service better?

A great conversation is part science and part art. We needed to build a team that could handle both the technical mechanics of conversations and the overall user experience. Depending on the use case, some conversations work better by voice, some by text, and some as a presentation with a voice-over.

This case study highlights a “Material Design” system approach to product design: one built from component libraries, design templates, documentation, guiding principles, functional guidelines, and usable code.

Material design systems were popularized by Google when it launched its Material Design system in June 2014.

I had been building these systems as a graphic designer since the late nineties. My first large-scale system was for AOL in 2006, when I helped redesign its entire platform (the system is still in use today).

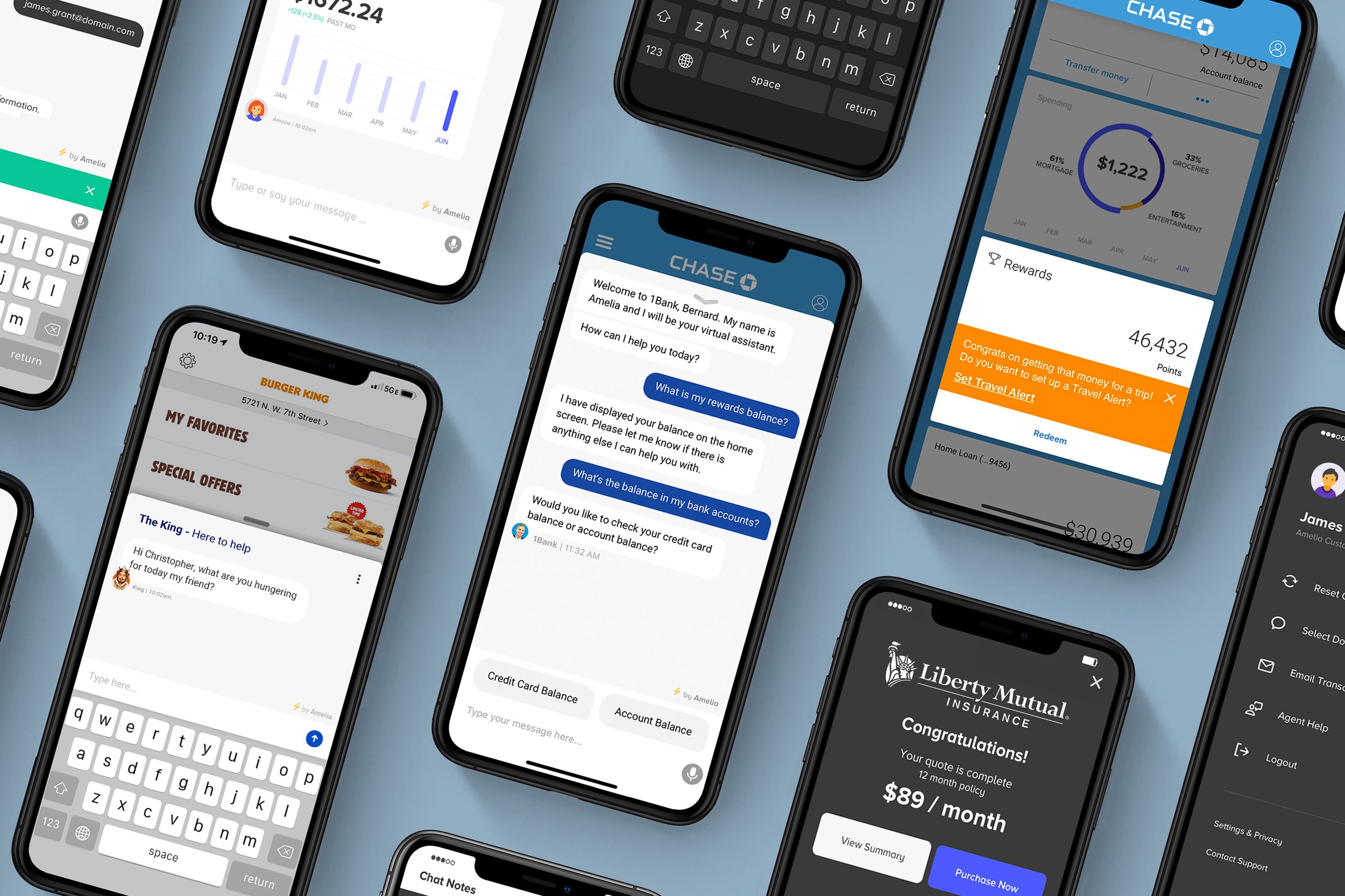

Sneak peek: the conversational platform enables thousands of experiences in hundreds of languages.

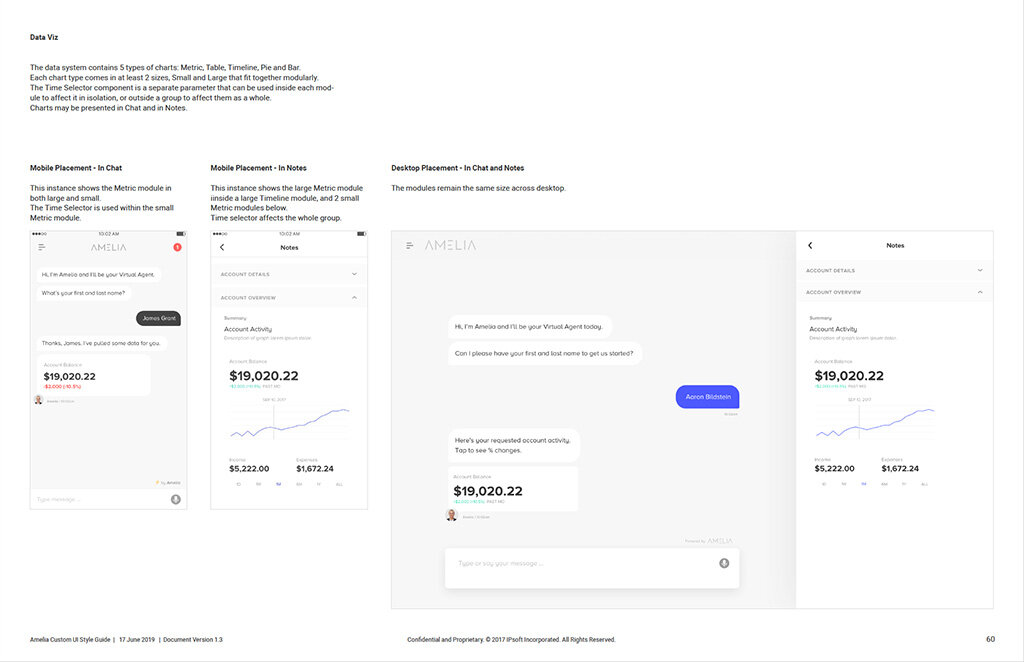

Amelia can present numerous UI components to assist comprehension, making the user experience familiar and efficient across touch points and contexts.

Clients across industries can customize their own unique experiences.

Available on iOS, Android and mobile web.

When I joined IPsoft in 2016, the company’s user experience was rudimentary and the product was driven predominantly by the engineering team. Its first iteration of AI algorithms could understand only limited conversational norms.

I was asked to build a team to develop the strategy and execute the design for a customer-facing product. My remit covered two main efforts:

Build a conversational platform.

Enhance Amelia’s conversational language.

Founded in 1998, IPsoft is a family-owned company. The CEO created an automated IT service desk called IPcenter, which runs ‘virtual engineers’ that maintain legacy systems. Amelia v1 launched in 2014, billed as “the world’s first chatbot.”

Amelia v.05

The state of the art prior to my joining the company.

The Turing Test

The CEO of IPsoft wanted to build the first AI to pass the Turing Test, a thought experiment Alan Turing proposed in 1950 when he asked, “Can machines think?”

The Turing test:

A test of a machine's ability to exhibit intelligent behavior equivalent to, or indistinguishable from, that of a human. A human evaluator would judge natural language conversations between a human and a machine designed to generate human-like responses.

The evaluator would be aware that one of the two partners in conversation is a machine, and all participants would be separated from one another. The conversation would be limited to a text-only channel, such as a computer keyboard and screen, so the result would not depend on the machine's ability to render words as speech. If the evaluator cannot reliably tell the machine from the human, the machine is said to have passed the test. The test results do not depend on the machine's ability to give correct answers to questions, only on how closely its answers resemble those a human would give.

– Wikipedia

The CEO and I had many discussions about what passing the Turing Test meant specifically for Amelia. We eventually broke it down into five things (read about them in my Amelia avatar case study).

Making an AI appear human isn’t easy; since the late 1960s, many have tried and failed. Early hype around the power of AI in the 1970s and 1980s inflated expectations, and a billion-dollar industry soon emerged, only to begin collapsing a few years later.

At a public debate at the annual meeting of AAAI (American Association for Artificial Intelligence), Roger Schank and Marvin Minsky, two leading AI researchers, warned the business community that enthusiasm for AI had spiraled out of control in the 1980s and that disappointment would certainly follow. Three years later, the billion-dollar AI industry began to collapse.

– Wikipedia

Conversational mechanics

Talking with Amelia needed to look, sound, and feel like talking with a real human being. To succeed, we had to deliver both a convincing conversation full of nuance and complexity and a human experience that used all the methods people use when sharing information.

Our approach had two parts:

1) Deliver a conversation that adheres to the norms of social etiquette, supplies helpful, relevant knowledge, and ultimately takes action.

The first point would be delivered by our Conversational Experience Designers, Intent Recognition Designers, Cognitive Solutions Architects, and Cognitive Implementation Engineers. They would work with client SMEs to uncover ideal use cases, build out the business process flows (BPNs), and craft the natural language Amelia would use with users. They would then layer on intent and entity training so that Amelia could comprehend what users were saying.

2) Engage in conversations on all the platforms where people have conversations today.

For the second point, we had to rethink what kinds of content Amelia would need to handle and build a way for her to locate and present the correct content in the context of the user’s request. People today don’t use just one means of communication when they talk with each other, especially when texting; they mix text, photos, emoji, links, and more. Amelia therefore needed to behave the same way, sharing rich content as humans do.

Improving the delivery process

We created new roles to augment the technical delivery teams, improving the quality of the conversational experiences.

With conversational AI, change never ends: the technical capabilities keep improving, use cases keep evolving, user expectations keep rising, and the number of verticals keeps growing. Ideally, the technology would adapt dialog scripts across channels. On the phone, the language and experience change because everything is verbal (there are no visual cues); on a touch device, the experience can combine voice, text, and visuals to become richer.

That automated capability is still rudimentary, so we have to construct alternate versions of the experience that match the user’s expectations for the channel they are using. We have various methods for writing dialogs for text and voice, and we try to keep the experiences as similar as possible for service consistency.
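To make the idea of channel-specific versions concrete, here is a minimal sketch, assuming a hypothetical `DialogTurn` schema in which each turn is authored once with a text wording, an optional voice wording, and optional rich UI components. It is not Amelia’s actual data model; `render` and the channel names `"voice"` and `"touch"` are illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class DialogTurn:
    """One assistant turn authored once, with per-channel variants (hypothetical schema)."""
    text: str                                            # wording for chat/text channels
    speech: Optional[str] = None                         # wording tuned for voice-only channels
    components: List[str] = field(default_factory=list)  # rich UI, e.g. "date_picker"


def render(turn: DialogTurn, channel: str) -> dict:
    """Choose what to send for a channel: 'voice' or 'touch' (assumed names)."""
    if channel == "voice":
        # No visual cues on the phone: speak only, never send UI components.
        return {"say": turn.speech or turn.text}
    if channel == "touch":
        # Touch devices can combine voice, text, and visuals.
        return {
            "say": turn.speech or turn.text,
            "show": turn.text,
            "components": list(turn.components),
        }
    raise ValueError(f"unknown channel: {channel}")


turn = DialogTurn(
    text="Which date works for you? Pick one below.",
    speech="Which date works for you? You can say something like next Tuesday.",
    components=["date_picker"],
)
print(render(turn, "voice"))
print(render(turn, "touch"))
```

Keeping both wordings on one turn object is one way to keep the text and voice experiences aligned while still letting each channel play to its strengths.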

Note: someone should build a model that recognizes the differences between spoken and written language, which could help select the appropriate experience in real time.

The Solutions Delivery Group required us to create new roles in order to deliver high-quality conversational experiences.

Cognitive experience design in action

A day in the life of the roles I created and managed. The team’s goals were to observe, understand, and codify an effective approach toward conversational experience design.

User Experience Designer (UXD)

“I start my day by interviewing end-users to uncover their needs in the problem space.”

“I create personas, and a happy path using prototypes to demonstrate my ideas.”

“I finalize my input with usability testing to see if users find the solution useful.”

Conversational Experience Designer (CED)

“I collaborate with the UXD on personas and on the dialog logic that enables a happy path.”

“I construct a conversational diagram to illustrate the supporting convo pathways.”

“I generate training data based on the intent architecture my IRD creates.”

Intent Recognition Designer (IRD)

“I scope and map the happy path into an intent architecture.”

“I set up the way Amelia learns, because I best understand linguistics and the intricacies of the software.”

“I train, test, and measure Amelia’s recognition rate to ensure users can effectively communicate with her.”

CEDs and UXDs work together to reduce the experience to the most convenient conversational flows possible.
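Measuring a recognition rate comes down to running labeled test utterances through the classifier and counting matches. The sketch below assumes a hypothetical `recognition_rate` helper and a toy keyword classifier standing in for Amelia’s NLU, which it does not model; the intents and utterances are invented for illustration.

```python
from collections import Counter
from typing import Callable, Iterable, Tuple


def recognition_rate(
    classify: Callable[[str], str],
    test_set: Iterable[Tuple[str, str]],
) -> Tuple[float, Counter]:
    """Return overall accuracy and a count of (expected, predicted) misses."""
    total = correct = 0
    misses: Counter = Counter()
    for utterance, expected in test_set:
        predicted = classify(utterance)
        total += 1
        if predicted == expected:
            correct += 1
        else:
            misses[(expected, predicted)] += 1
    return (correct / total if total else 0.0), misses


# Toy keyword classifier standing in for a real NLU model (hypothetical).
def toy_classifier(utterance: str) -> str:
    text = utterance.lower()
    if "claim" in text:
        return "file_claim"
    if "bill" in text or "pay" in text:
        return "pay_bill"
    return "fallback"


tests = [
    ("I need to file a claim", "file_claim"),
    ("Can I pay my bill online?", "pay_bill"),
    ("My car got hit yesterday", "file_claim"),
    ("How much do I owe?", "pay_bill"),
]
rate, misses = recognition_rate(toy_classifier, tests)
print(f"recognition rate: {rate:.0%}")  # 2 of 4 correct -> 50%
print(misses.most_common())
```

Tallying misses by (expected, predicted) pair shows which intents need more training data, which is where the CED’s training-data work comes back in.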

Multimodal messaging platform

The Customer UI: a mobile-responsive front end, available on web, iOS, and Android.

A zero-to-one messaging platform

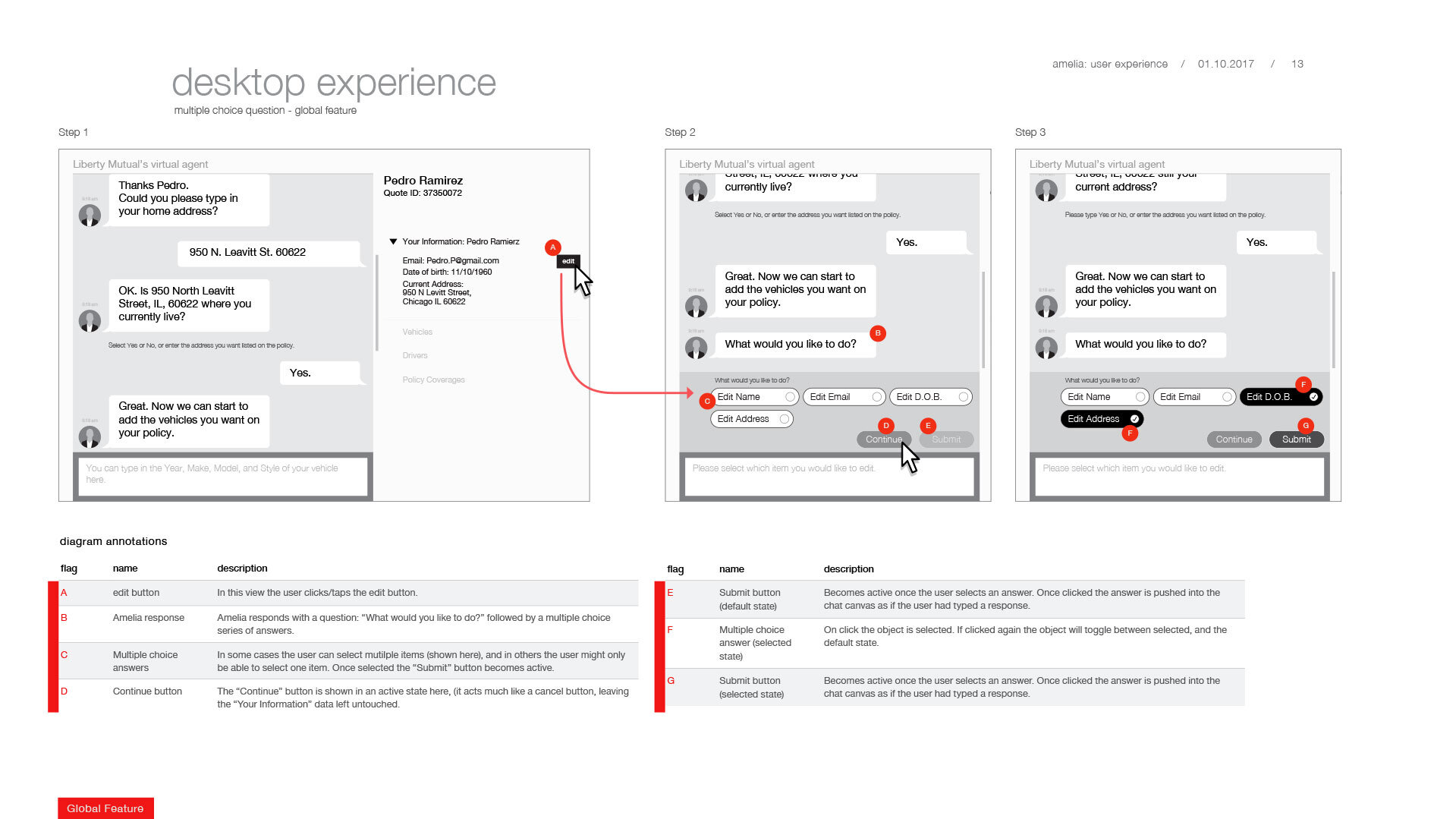

When I started the product design group, the company did not have a clearly defined vision for Amelia. With few resources, I was asked to lead the effort. I worked with clients, internal business stakeholders, R&D, and delivery teams to identify an MVP. We focused on one client, Liberty Mutual, because they were willing to push the envelope on use case selection and work with us to help define features.

We had to keep in mind that we’d eventually be designing a platform for every industry, so we avoided one-off, client-specific “snowflake” features. Instead, we built industry-agnostic features that would work across numerous use cases and devices.

We created a user community that not only helped us design the Amelia messaging UI but also helped Liberty Mutual understand where their existing agents were missing the mark on customer service. We iterated with the engineering team using InVision prototypes to align on functionality.

The right-hand column feature (called “Chat Notes”) required working with R&D to enhance the AI platform. This breakthrough enabled other enhancements to Amelia: users could easily go back in a conversation (technically called a “go-back”), edit responses in real time without crashing the system, skip questions based on logic statements, and change the overall flow to their liking.
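The go-back, real-time edit, and logic-based skip behaviors can be illustrated with a small slot-filling model. This is a minimal sketch under stated assumptions, not IPsoft’s implementation: `Question`, `Conversation`, and the claim-intake questions are hypothetical, and a skip rule is modeled as a predicate over the answers collected so far.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Question:
    slot: str
    prompt: str
    # Logic statement: skip this question when it returns True for the current answers.
    skip_if: Optional[Callable[[Dict[str, str]], bool]] = None


class Conversation:
    def __init__(self, questions: List[Question]):
        self.questions = questions
        self.answers: Dict[str, str] = {}
        self.history: List[str] = []  # slots in the order they were answered

    def _active(self) -> List[Question]:
        # Recomputed every turn, so an edited answer can reroute the flow.
        return [q for q in self.questions
                if not (q.skip_if and q.skip_if(self.answers))]

    def next_question(self) -> Optional[Question]:
        for q in self._active():
            if q.slot not in self.answers:
                return q
        return None  # flow complete

    def answer(self, value: str) -> None:
        q = self.next_question()
        if q is None:
            raise RuntimeError("no open question")
        self.answers[q.slot] = value
        self.history.append(q.slot)

    def go_back(self) -> None:
        # Undo the most recent answer so that question is asked again.
        if self.history:
            self.answers.pop(self.history.pop(), None)

    def edit(self, slot: str, value: str) -> None:
        # Change an earlier answer in place without restarting the flow.
        if slot not in self.answers:
            raise KeyError(slot)
        self.answers[slot] = value

    def notes(self) -> Dict[str, str]:
        # What a notes column could show: answers to currently active questions only.
        active = {q.slot for q in self._active()}
        return {s: v for s, v in self.answers.items() if s in active}


flow = [
    Question("incident", "What happened?"),
    Question("injured", "Was anyone injured?"),
    Question("injury_details", "Please describe the injuries.",
             skip_if=lambda a: a.get("injured") != "yes"),
    Question("photos", "Can you upload photos of the damage?"),
]
c = Conversation(flow)
c.answer("rear-ended at a light")
c.answer("no")                 # injury_details is skipped
print(c.next_question().slot)  # photos
c.edit("injured", "yes")       # editing reroutes the flow
print(c.next_question().slot)  # injury_details
c.go_back()                    # undo the "injured" answer
print(c.next_question().slot)  # injured
```

Because the list of active questions is recomputed on every turn rather than stored, editing an earlier answer reroutes the remaining flow without restarting the conversation.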

The wireframes below capture early discussions about features we could launch quickly and validate with a segment of Liberty Mutual’s customers.

Making the design consumable to engineers & clients

Building a Storybook repository of components made it easier for UI Engineers and Cognitive Implementation Engineers to work together on delivering well-designed Amelia experiences.

The library also helped our Linguistic team ideate about which elements could supplement the conversational experiences across different user scenarios and device modalities. We created numerous Confluence pages with detailed instructions on how to build UI-enabled experiences, along with code examples and W3C accessibility guidelines.

Customer product guide

The product guide contains functional & visual design elements, along with best practices on language and VUX.

Component library

The code repository presents elements in a style-guide format, making it easier for engineers and designers to collaborate.

We seated engineers with designers to make sure everyone was on the same page about sprint deliverables, the functionality of each component, and whether something was achievable in code.

Custom icon library used across multiple products.

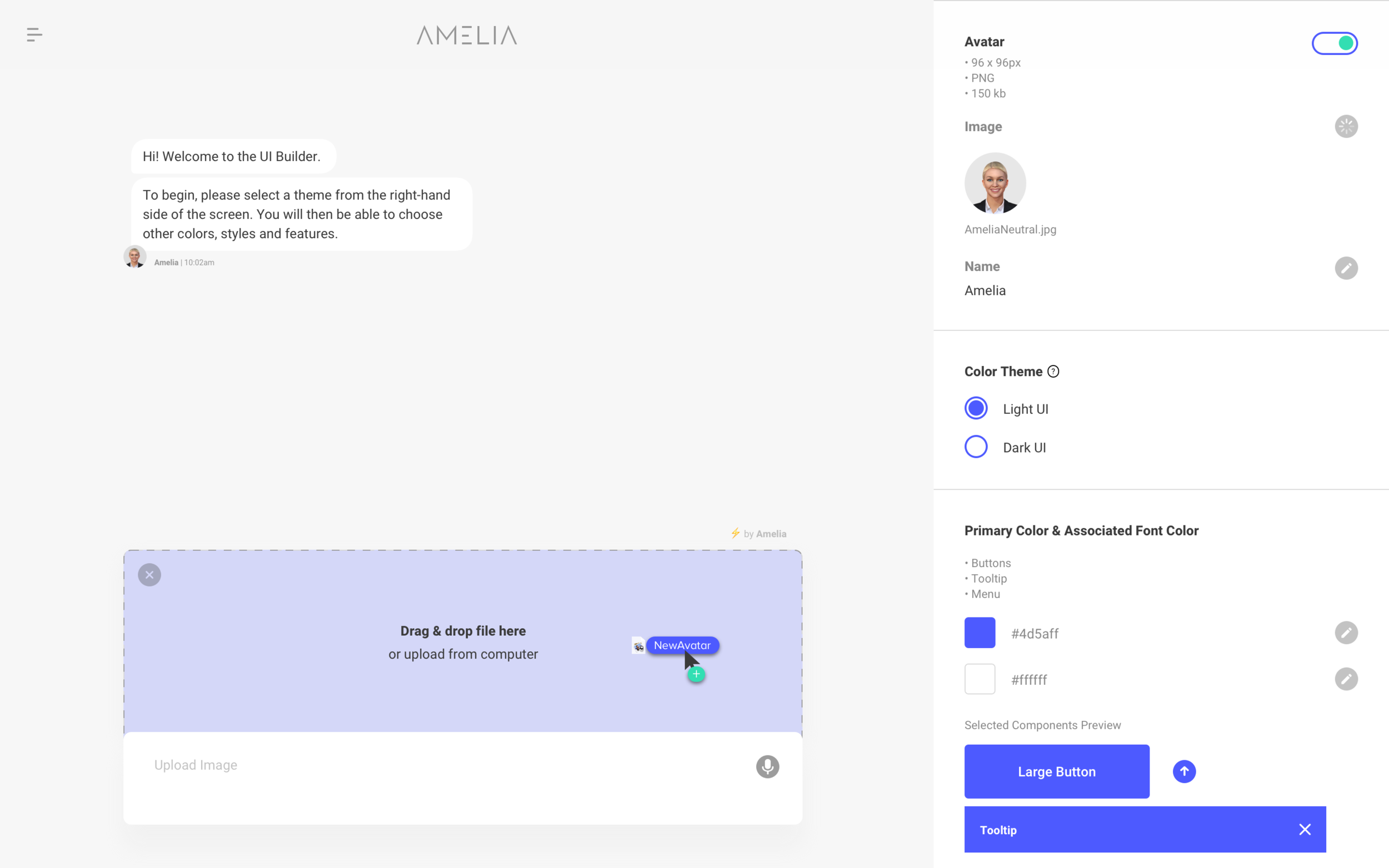

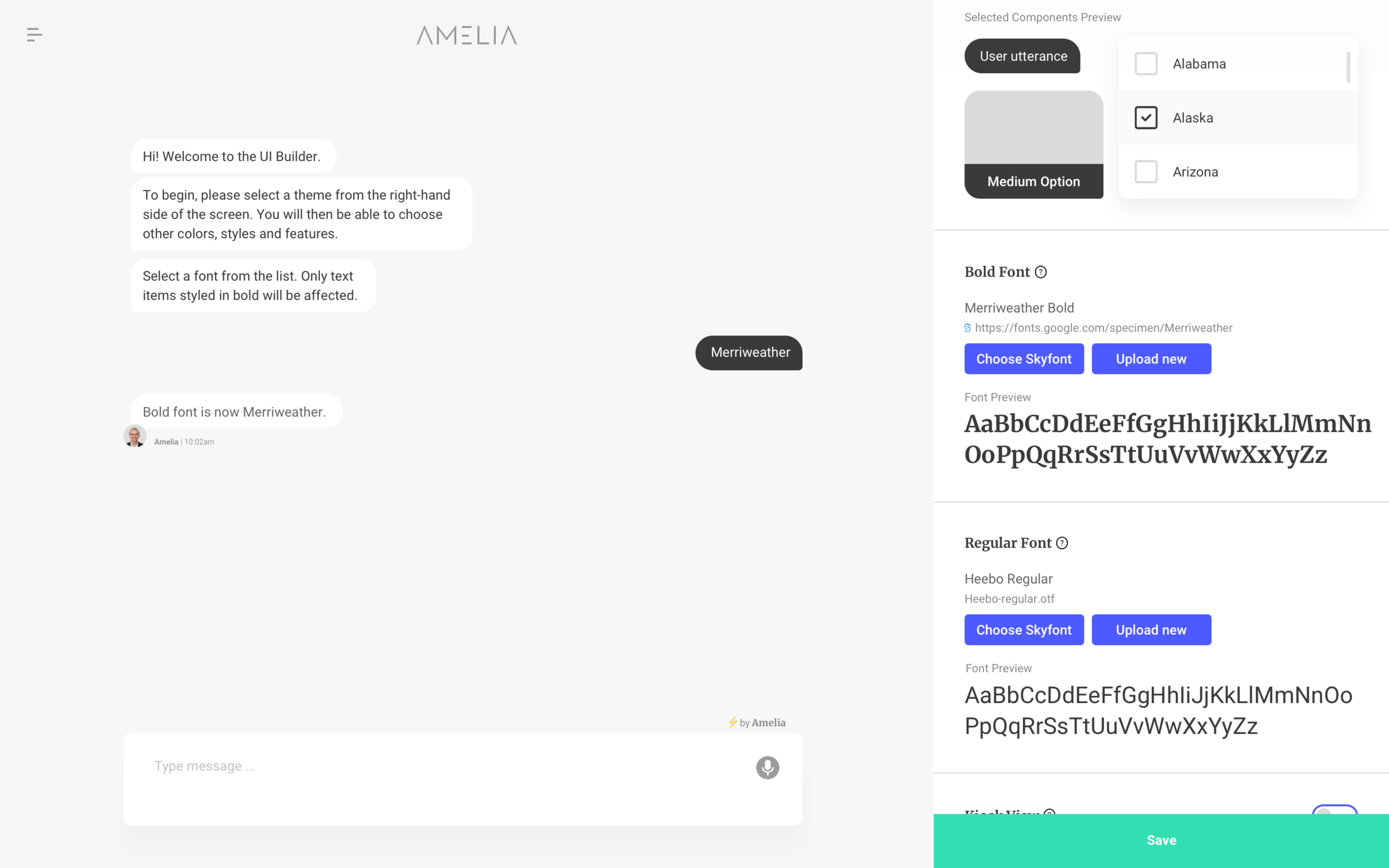

Customer UI Configurator

Customers can configure the UI to work on many platforms, giving them flexibility.

Amelia could be published as a desktop web page, integrated into existing portals or websites, shown as a web page overlay, or embedded in a mobile app, allowing Amelia to take control of the customer experience.

We built a WYSIWYG configurator app that let clients brand and stylize the entire experience; they could change the UI’s fonts, colors, button styles, icons, and a plethora of other attributes in a web browser before they went to production.
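A configurator like this ultimately has to turn a client’s choices into styles the chat UI can consume. The sketch below assumes a hypothetical `Theme` with a handful of attributes and emits CSS custom properties under an invented `--amelia-` prefix; the real configurator’s attributes and output format are not described here.

```python
from dataclasses import dataclass, fields, replace


@dataclass(frozen=True)
class Theme:
    """Brandable attributes a client can set in the configurator (hypothetical)."""
    font_family: str = "Helvetica, Arial, sans-serif"
    primary_color: str = "#1a73e8"
    bubble_color: str = "#f1f3f4"
    button_radius_px: int = 4


def apply_overrides(base: Theme, overrides: dict) -> Theme:
    """Return a new Theme with the client's choices; reject unknown attributes."""
    known = {f.name for f in fields(Theme)}
    unknown = set(overrides) - known
    if unknown:
        raise ValueError(f"unknown theme attributes: {sorted(unknown)}")
    return replace(base, **overrides)  # base stays untouched (frozen dataclass)


def to_css_variables(theme: Theme) -> str:
    """Serialize a Theme as CSS custom properties for the chat UI."""
    lines = [":root {"]
    for f in fields(theme):
        value = getattr(theme, f.name)
        name = f.name
        if name.endswith("_px"):
            name, value = name[:-3], f"{value}px"  # carry the unit into CSS
        lines.append(f"  --amelia-{name.replace('_', '-')}: {value};")
    lines.append("}")
    return "\n".join(lines)


client_theme = apply_overrides(Theme(), {"primary_color": "#003366", "button_radius_px": 12})
print(to_css_variables(client_theme))
```

Rejecting unknown attribute names at configuration time surfaces typos before anything reaches production, and building a new theme object each time keeps the defaults intact for the next client.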

This is an early version of the configurator that shows some of its functionality. It has matured a lot since then, with Amelia taking on part of the configuration role. Clients wanted much more control over their own branding, so we enhanced the configurator to give them total control. They also wanted more options for the avatar, so we created a series of animations in which the avatar expresses emotions in the chat. We also built a randomizer that lets clients set rules about which avatars can be shown, because they wanted to present a diverse set of AI avatars.
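A rule-based avatar randomizer can be sketched as filter-then-sample: keep only the avatars that satisfy every client rule, then pick one at random. The `Avatar` type, tag names, and weights below are hypothetical; this illustrates the idea rather than the shipped randomizer.

```python
import random
from dataclasses import dataclass
from typing import Callable, FrozenSet, Optional, Sequence


@dataclass(frozen=True)
class Avatar:
    avatar_id: str
    tags: FrozenSet[str]  # attributes a client can write rules against
    weight: float = 1.0   # relative likelihood of being shown


# A client rule returns True when an avatar may be shown (hypothetical format).
Rule = Callable[[Avatar], bool]


def pick_avatar(
    avatars: Sequence[Avatar],
    rules: Sequence[Rule],
    rng: Optional[random.Random] = None,
) -> Avatar:
    rng = rng or random.Random()
    # Filter: keep only avatars that satisfy every client rule.
    eligible = [a for a in avatars if all(rule(a) for rule in rules)]
    if not eligible:
        raise ValueError("no avatar satisfies the client's rules")
    # Sample: weighted random choice among the eligible avatars.
    return rng.choices(eligible, weights=[a.weight for a in eligible], k=1)[0]


catalog = [
    Avatar("ava-1", frozenset({"illustrated", "approved"})),
    Avatar("ava-2", frozenset({"illustrated", "approved"}), weight=2.0),
    Avatar("ava-3", frozenset({"photoreal", "approved"})),
    Avatar("ava-4", frozenset({"illustrated"})),  # not yet approved
]
client_rules = [
    lambda a: "approved" in a.tags,
    lambda a: "illustrated" in a.tags,
]
print(pick_avatar(catalog, client_rules).avatar_id)  # ava-1 or ava-2
```

Passing in a `random.Random` seeded per session (for example, from a session ID string) would keep the same avatar for a whole conversation while still varying it across users.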

Custom chat avatars for Liberty Mutual: ten expressions that change as needed to convey empathy during a conversation.

UI chat overlay

Amelia can control the web experience, acting as an online shopping guide through purchases and returns.

Retail assistant functional prototype

Note that Elizabeth is clicking the microphone button in the UI and speaking naturally with the AI. She is not modulating her voice or speaking in commands, as users must with other NLU AIs.

Adoption & investment

While clients can use Amelia’s APIs to build their own messaging UIs, the conversational platform has been widely adopted across numerous verticals (over 98% of our corporate clients use it), enabling them to scale customized, efficient services.

We are a small team (fewer than 20 people), yet our approach to designing and developing the UI has influenced the larger global R&D team (over 400 engineers). By rapidly prototyping features and testing them within our group and with client test users, we can gain alignment quickly and codify features across products.

Our material design system has become so popular that it is being used across product lines.

Designers from other groups are coming to us for guidance, and we now hold group critiques (a foreign concept at a small, family-owned company).